To use Screaming Frog for SEO, you first need to understand what it is and how it works. This matters for tasks such as creating a sitemap and improving SEO. This article explains what Screaming Frog is and how it crawls websites.

What is Screaming Frog?

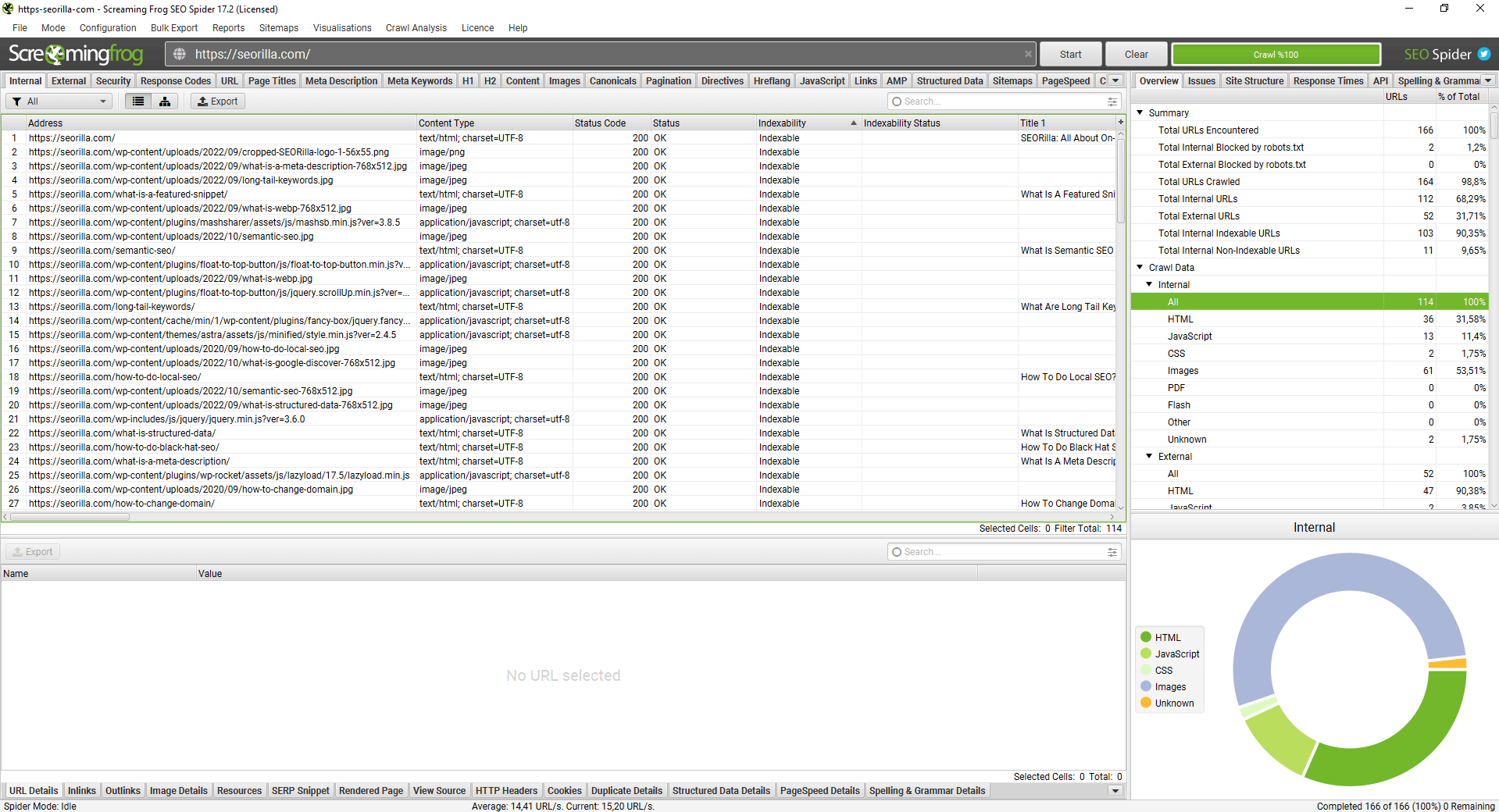

Screaming Frog is a desktop program that crawls websites and collects data about them. It is a powerful SEO audit tool that can crawl large websites. Many successful companies like Apple, Amazon, and Google use this tool. Checking a site manually is risky because you can easily miss duplicate content, a redirect, or a meta refresh. This SEO spider lets you export on-page elements such as the meta description, page title, and URL, so the export can serve as a foundation for SEO recommendations.

Screaming Frog finds broken links, duplicate content, missing title tags, redirects, and similar issues, and it can connect with Google Analytics for audits. It collects data about the site and presents it in a table.

How to Use Screaming Frog to Crawl a Website

Crawling websites and collecting data requires a lot of memory. The Screaming Frog SEO spider uses a configurable hybrid engine to crawl large-scale websites. Crawling matters because it is how you collect data about a website’s potential issues.

Screaming Frog’s default setup can crawl websites with fewer than 10,000 pages. For a very large site (for example, 3 million pages), you need to:

- Increase computer RAM.

- Increase Screaming Frog RAM availability.

- Increase Crawl Speed.

- Segment the Website into Crawlable Chunks.

- Don’t include unneeded URLs.
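
As a rough illustration of segmenting a site into crawlable chunks, the sketch below groups a list of URLs by their first path segment so that each group could be crawled on its own. The URLs and the `segment_by_section` helper are hypothetical, and grouping by first path segment is only one possible way to split a site.

```python
from collections import defaultdict
from urllib.parse import urlparse


def segment_by_section(urls):
    """Group URLs by their first path segment, e.g. /blog/ or /shop/."""
    sections = defaultdict(list)
    for url in urls:
        path = urlparse(url).path
        # The first non-empty path segment names the section; none means the root.
        parts = [p for p in path.split("/") if p]
        key = "/" + parts[0] + "/" if parts else "/"
        sections[key].append(url)
    return dict(sections)


# Hypothetical URLs for illustration only.
urls = [
    "https://example.com/",
    "https://example.com/blog/post-1",
    "https://example.com/blog/post-2",
    "https://example.com/shop/item-9",
]
sections = segment_by_section(urls)
for section, members in sorted(sections.items()):
    print(section, len(members))
```

A section with far more URLs than the others is a candidate for splitting further or for excluding unneeded URLs.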

First, complete a full crawl of the site. You can follow these steps to crawl a website:

- Click Spider in the Configuration menu.

- Select “Crawl All Subdomains” in the menu. You can also crawl images, JavaScript, CSS, and external links to get a complete view of your site. For a faster turnaround, leave media and scripts unchecked so that only pages and text elements are checked.

- Start the crawl and wait until it completes. How fast it runs depends on the power of your computer.

- When the crawl finishes, click the Internal tab and filter the results by HTML. Then export them. You will get a CSV file of the crawled data, organized by HTML page, which helps you identify and fix issues on a given page.
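
Once you have the CSV export, a short script can flag problem pages for you. The sketch below is a minimal example that assumes the export has columns named `Address`, `Status Code`, and `Title 1`; check the header row of your own file, since the names may differ. The sample rows are made up and stand in for a real export.

```python
import csv
import io

# Stand-in for an exported file. The column names "Address", "Status Code"
# and "Title 1" are assumptions and may differ in your export.
SAMPLE_EXPORT = """Address,Status Code,Title 1
https://example.com/,200,Home
https://example.com/about,200,
https://example.com/old,404,Not Found
"""


def find_issues(csv_file):
    """Return (urls_missing_title, urls_with_error_status) from a crawl export."""
    missing_title, errors = [], []
    for row in csv.DictReader(csv_file):
        status = int(row["Status Code"])
        if status >= 400:
            # 4xx and 5xx responses are reported as errors, not title problems.
            errors.append(row["Address"])
        elif not row["Title 1"].strip():
            missing_title.append(row["Address"])
    return missing_title, errors


missing_title, errors = find_issues(io.StringIO(SAMPLE_EXPORT))
print("Missing titles:", missing_title)
print("Error responses:", errors)
```

To run this against a real export, pass an opened file instead of the in-memory sample, for example `open("internal_html.csv", newline="", encoding="utf-8")`, where the file name is hypothetical.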

Why Is Screaming Frog Not Crawling All URLs?

Screaming Frog is an effective tool for crawling websites and extracting data. But if it does not crawl all URLs, you cannot perform a thorough SEO audit. There are several reasons why Screaming Frog may not crawl every URL, and the most common is that the website has been configured to block crawlers. These are the main reasons why Screaming Frog is not crawling all URLs.

- The site may be blocked by robots.txt.

Robots.txt may block Screaming Frog from crawling pages. In this case, you can configure the SEO spider to ignore robots.txt. Website owners use robots.txt to tell web crawlers what they are allowed to access. When a page is disallowed in robots.txt, the webmaster does not want crawlers to access it, and that includes its on-page SEO elements. For example, website owners may want to keep crawlers away from sensitive information.
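
To see how a robots.txt rule applies to a given URL, you can test it with Python’s standard `urllib.robotparser` module. This is a minimal sketch: the robots.txt content, the `ExampleBot` crawler name, and the `is_allowed` helper are all made up for illustration, and the snippet does not reproduce how Screaming Frog itself evaluates rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Check whether the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# "ExampleBot" is a made-up crawler name.
print(is_allowed(ROBOTS_TXT, "ExampleBot", "https://example.com/private/report"))
print(is_allowed(ROBOTS_TXT, "ExampleBot", "https://example.com/blog/"))
```

A site that wants to block everything would use `Disallow: /` under `User-agent: *`, in which case the same check reports every URL as disallowed.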

- The User-Agent May Be Blocked.

The User-Agent is a string of text that identifies the browser or crawler making a request, along with the device and operating system. A website can use it to change how it behaves for different visitors. You can change the User-Agent to pretend to be a different browser, but some sites still block certain browsers or crawlers.
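
The sketch below illustrates the idea in Python. It prepares two requests with different User-Agent strings (without sending them) and runs each through a made-up blocklist check of the kind a site might apply. The `BLOCKED_SUBSTRINGS` list, the `ExampleBot` name, and both helper functions are assumptions for illustration, since real sites apply their own rules.

```python
from urllib.request import Request

# Hypothetical blocklist a site might apply; real rules vary per site.
BLOCKED_SUBSTRINGS = ("examplebot",)


def is_blocked(user_agent):
    """Mimic a server that rejects requests whose User-Agent matches a blocklist."""
    ua = user_agent.lower()
    return any(token in ua for token in BLOCKED_SUBSTRINGS)


def build_request(url, user_agent):
    """Prepare (but do not send) a request carrying the given User-Agent."""
    return Request(url, headers={"User-Agent": user_agent})


crawler = build_request("https://example.com/", "ExampleBot/1.0")
browser = build_request("https://example.com/", "Mozilla/5.0 (X11; Linux x86_64)")

for req in (crawler, browser):
    # urllib stores header names in capitalized form, hence "User-agent".
    ua = req.get_header("User-agent")
    print(ua, "->", "blocked" if is_blocked(ua) else "allowed")
```

The same URL gets a different outcome depending only on the User-Agent string, which is why changing it can change what a crawler is able to reach.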

- The Nofollow attribute

A nofollow attribute tells crawlers not to follow a link. If the links on a page are set to nofollow, Screaming Frog may not follow them, so the pages they point to can be left out of the crawl.
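
To check which links on a page carry the attribute, you can parse the HTML and look at each link’s `rel` value. The sketch below uses Python’s standard `html.parser` module. The `LinkCollector` class and the sample markup are hypothetical, and the snippet only shows how a crawler could separate the two kinds of links, not how Screaming Frog does it internally.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect <a href> links, split by whether rel contains "nofollow"."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        if not href:
            return
        # rel can hold several space-separated tokens, e.g. "nofollow noopener".
        rel_tokens = (attr.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.nofollow.append(href)
        else:
            self.followed.append(href)


# Hypothetical page markup for illustration.
HTML = """
<a href="/pricing">Pricing</a>
<a href="/login" rel="nofollow">Log in</a>
<a href="https://example.org/" rel="nofollow noopener">Partner</a>
"""

collector = LinkCollector()
collector.feed(HTML)
print("Would follow:", collector.followed)
print("Would skip:", collector.nofollow)
```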

- JavaScript Requirement

JavaScript is a programming language used to build interactive web pages. It can also be used to track a user’s browsing activity, which is why some users disable it. Doing so can change how a website is displayed. In the same way, if a site relies on JavaScript to show its content or links, a crawl that does not run JavaScript may miss those URLs.

How Do You Ignore Robots in Screaming Frog?

Sites in development are often blocked by robots.txt, so you need to ignore it to crawl them. Use the ‘Ignore robots.txt’ configuration, then enter the staging site URL and start the crawl. In the robots.txt settings, you can also switch off showing internal and external links blocked by robots.txt in the user interface. With robots.txt ignored, on-page elements such as headings and meta titles are open to crawling. Staging sites commonly use directives that block all robots from all URLs. If you control the site, you can also remove the robots.txt file itself, which sits at the end of the subdomain URL.

Conclusion

In this article, we briefly explained how to use Screaming Frog in SEO. It helps you find broken links, duplicate content, and missing title tags, and it connects with Google Analytics.

You can use it to crawl large websites and collect data. However, it may not crawl some URLs because of robots.txt rules and other technical issues. You can work around robots.txt by ignoring it in the configuration, removing the file from the subdomain, or making all your pages open to crawling.

Frequently Asked Questions on Screaming Frog

How can I connect Screaming Frog to Google Search Console?

Open the ‘Configuration’ dropdown in the Screaming Frog menu and find the API Access option. Select ‘Google Search Console’ in that menu. The window that opens walks you through connecting your Google Search Console account.

Is there an API in Screaming Frog?

Yes. The URL Inspection API has been integrated into the Screaming Frog SEO Spider, which lets users pull in data for up to 2,000 URLs per property per day.

How can I download Screaming Frog?

On the Screaming Frog homepage, click Download under SEO spider and select your operating system.